The Hidden Costs of Code: What Fortran Taught Me About Resource Efficiency and Climate Impact

How decades-old lessons in programming thriftiness could help us tackle the growing carbon footprint of modern machine learning and data centers

I have a confession to make: I’m not a classically trained programmer. I’m more of a hack. I didn’t go to school for computer science; I studied civil engineering. Did I learn some programming back in those dark ages? Yes. I learned Fortran 77 and promptly forgot it the moment I graduated.

Once I started working in the "real world," I picked up programming to automate various tasks. First it was Excel’s Goal Seek and linear regression, then Lisp routines for AutoCAD. Eventually I discovered Python in the early 2010s, and I liked it a lot. Python let me plot candlestick charts and read CSV files, and for the first time learning modern programming felt within my grasp.

Little did I know that I’d end up using Python every day in the machine learning field, as I have since 2017. Python is everywhere. Nearly every data scientist or machine learning practitioner I know uses it for data munging, analytics, big data work, and, increasingly, GPT-related projects.

Someone once said Python is the glue that holds the Internet together, and I agree. Yet, when I reflect on my career, I always come back to Fortran—not as the ideal language, nor as a victim of some Stockholm Syndrome—but for what it represents to me: a compact, efficient, and resource-thrifty language.

Fortran 77: Resource Efficiency from the Start

The goal of being thrifty with resources was drilled into me back in engineering school. My alma mater, NJIT, prided itself on giving all incoming freshmen a desktop computer, which we had to assemble ourselves. This was in the early ’90s, before the Internet as we know it today.

If I remember correctly, it was an Intel 286 with two 5¼-inch floppy drives and no hard drive. Every program had to be loaded into memory, which, as I recall, was no more than 64 kilobytes. For our assignments, we had to write our Fortran programs compactly enough to load directly into onboard memory. Then we spent hours fixing compilation errors and recompiling.

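At the time I thought compiling Fortran was torture, and I didn’t appreciate how significant the language was for numerical computing. NumPy’s linear algebra routines, for example, call into BLAS and LAPACK libraries such as OpenBLAS, and those libraries trace their lineage to Fortran (LAPACK’s reference implementation is still written in it).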

Fortran may not come up much in my circles, but it remains relevant today: it was #10 on the September 2024 TIOBE Index. NASA still uses Fortran 90 in its global climate simulation models, taking advantage of the language’s parallel execution capabilities and GPU support.

Wise Resource Management

With the rise of GPT, I often wonder about the environmental footprint of running all these GPUs to build large language models (LLMs). I can’t help but look back on my resource-thrifty Fortran programming days.

Compared to Python, Fortran is far more resource-efficient, but it is harder to write, and it takes longer to get things done. If you gave a recent college graduate a laptop with both Fortran and Python environments, I believe they’d learn Python faster and finish their tasks sooner.

Python is often criticized for not being resource-efficient. In CPython, the infamous Global Interpreter Lock (GIL) lets only one thread execute Python bytecode at a time, so CPU-bound multithreaded code doesn’t run in parallel. Workarounds such as Dask and Cython emerged to improve Python’s performance. Python 3.11 brought substantial interpreter speedups, although the GIL remains in the default build, and I’m glad to see things moving in the right direction.
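To make the GIL point concrete, here is a minimal sketch, assuming standard CPython with the GIL enabled. The function `count_primes` is a hypothetical, deliberately naive CPU-bound workload. The standard library’s `concurrent.futures.ProcessPoolExecutor` runs it in separate processes, each with its own interpreter and its own GIL, which is one common way around the lock for CPU-bound code.

```python
"""Sketch: sidestepping the GIL for CPU-bound work with processes.

Assumes standard (GIL-enabled) CPython. Threads share one interpreter
lock, so CPU-bound Python code does not run in parallel across threads;
separate processes each get their own interpreter and their own GIL.
"""
from __future__ import annotations

from concurrent.futures import ProcessPoolExecutor


def count_primes(limit: int) -> int:
    """Hypothetical CPU-bound workload: naive count of primes below `limit`."""
    count = 0
    for n in range(2, limit):
        # n is prime if no divisor in [2, sqrt(n)] divides it evenly
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def count_primes_parallel(limits: list[int], workers: int = 4) -> list[int]:
    """Run count_primes for each limit in a pool of worker processes."""
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(count_primes, limits))


if __name__ == "__main__":
    # The __main__ guard matters on platforms that spawn fresh worker
    # processes, which re-import this module.
    print(count_primes_parallel([20_000] * 4))
```

Processes use more memory than threads, since each worker carries a full interpreter, so this approach trades memory for parallelism: exactly the kind of resource trade-off this article is about.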

This speed-versus-resource debate crosses my mind a lot these days, especially considering the effects of electricity usage on climate change. Data centers and their support systems consume vast amounts of power.

“The largest data centers require more than 100 megawatts of power capacity, which is enough to power some 80,000 U.S. households.” — Energy Innovation via TechTarget.
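Taking the quote at face value, and assuming the facility draws its full 100 MW around the clock (an assumption made only for this back-of-the-envelope check), the arithmetic per household looks like this:

```python
# Back-of-the-envelope check of the quoted figure:
# 100 MW of capacity spread across 80,000 households.
# Assumes the full capacity is drawn continuously all year.
DATA_CENTER_MW = 100
HOUSEHOLDS = 80_000
HOURS_PER_YEAR = 24 * 365  # ignores leap years

# Average continuous draw per household, in kilowatts
kw_per_household = DATA_CENTER_MW * 1_000 / HOUSEHOLDS
# Energy per household over a year, in kilowatt-hours
kwh_per_household_year = kw_per_household * HOURS_PER_YEAR

print(f"{kw_per_household:.2f} kW per household")                   # 1.25 kW per household
print(f"{kwh_per_household_year:,.0f} kWh per household per year")  # 10,950 kWh per household per year
```

That works out to about 1.25 kW of continuous draw, or roughly 11,000 kWh per household per year, which is in the ballpark of average U.S. household electricity use.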

However, the environmental cost of these data centers goes beyond electricity. As McGovern noted in the TechTarget article, the infrastructure—buildings and cooling systems—also produces a significant amount of CO2 emissions.

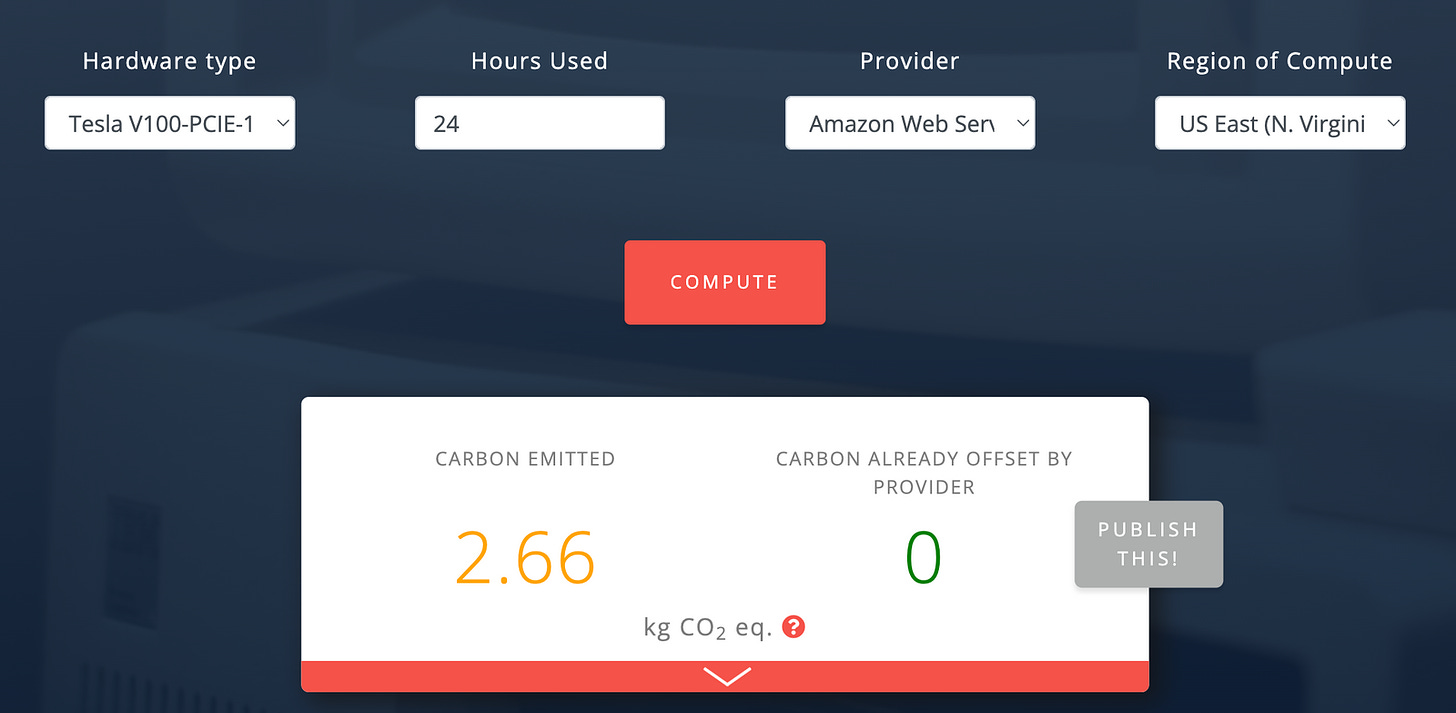

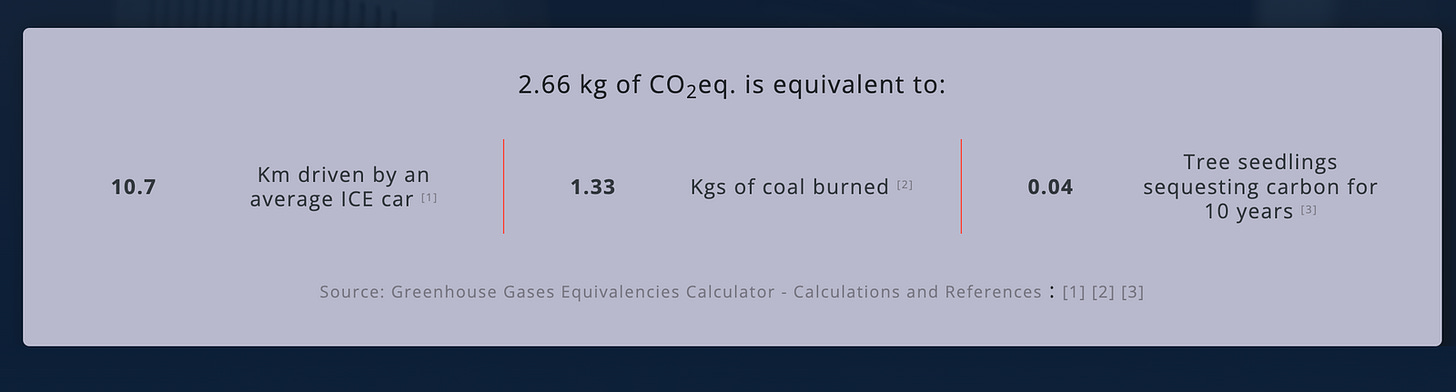

I often wonder how large a carbon footprint my projects leave behind. How much CO2 is generated when I run Python scripts or leave a GPU instance running overnight? Running a single, simple deep learning experiment on an NVIDIA Tesla V100 instance on AWS in the Virginia region for 24 hours produces a carbon footprint equivalent to driving 6.6 miles.

That’s just one experiment over 24 hours. I sometimes wonder how many miles (or kilometers) I’ve "driven" through all my years of tinkering.
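Figures like the 6.6 miles above are estimates, and such estimates usually boil down to the energy a job uses multiplied by the carbon intensity of the local grid. Here is a rough sketch of that calculation. Every input is an illustrative assumption, not the data behind the quoted figure: a 300 W average GPU draw (around the rated power of a V100 SXM2 card), a hypothetical grid intensity of 0.4 kg CO2 per kWh, and a car emitting about 0.4 kg CO2 per mile (roughly the EPA’s figure for a typical passenger vehicle). The optional `pue` (power usage effectiveness) factor stands in for cooling and facility overhead; the function names are hypothetical.

```python
"""Rough CO2 estimate for a GPU job: energy used x grid carbon intensity.

All example values are illustrative assumptions, not measured figures.
"""


def gpu_job_co2_kg(
    gpu_watts: float,
    hours: float,
    grid_kg_per_kwh: float,
    pue: float = 1.0,
) -> float:
    """Estimate kg of CO2 for running one GPU for `hours`.

    gpu_watts       -- average power draw of the GPU (assumed constant)
    grid_kg_per_kwh -- carbon intensity of the regional grid
    pue             -- power usage effectiveness; values above 1.0 add
                       cooling and facility overhead on top of the GPU
    """
    energy_kwh = gpu_watts * hours / 1_000
    return energy_kwh * pue * grid_kg_per_kwh


def car_miles_equivalent(co2_kg: float, car_kg_per_mile: float) -> float:
    """Convert kg of CO2 into miles driven by a car emitting car_kg_per_mile."""
    return co2_kg / car_kg_per_mile


if __name__ == "__main__":
    # Hypothetical inputs: a 300 W GPU for 24 hours on a grid emitting
    # 0.4 kg CO2/kWh, compared with a car emitting 0.4 kg CO2 per mile.
    co2 = gpu_job_co2_kg(gpu_watts=300, hours=24, grid_kg_per_kwh=0.4)
    miles = car_miles_equivalent(co2, car_kg_per_mile=0.4)
    print(f"{co2:.2f} kg CO2, roughly {miles:.1f} miles of driving")
```

With these assumed inputs the job comes to about 2.9 kg of CO2, or around 7 miles of driving, the same order of magnitude as the figure above. Change the grid intensity or the PUE and the answer moves proportionally.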

Faster, Better, Cheaper?

This has led me to ruminate over whether writing programs faster, executing them more quickly, and using fewer resources could shrink our carbon footprint. Could we reduce our environmental impact by using GPUs and chipsets more wisely instead of chasing marginal gains in model accuracy or mining cryptocurrency? Would it make a difference?

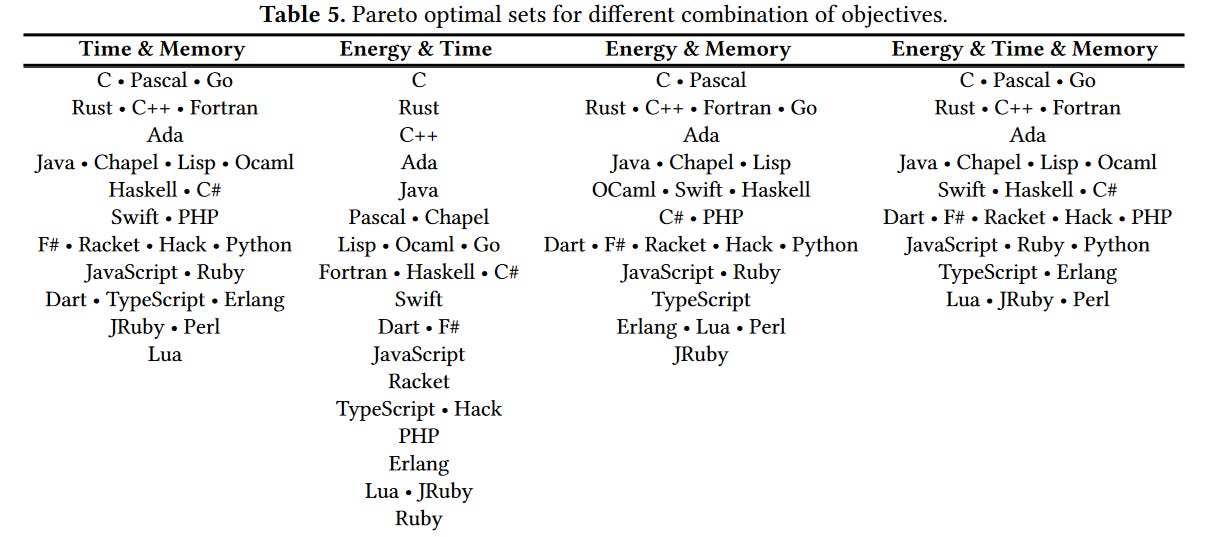

The trade-offs between programming languages also intrigue me. In energy-efficiency benchmarks, compiled languages such as C, Rust, C++, Ada, and Java consistently rank among the most efficient in energy, time, and memory, while interpreted languages such as Python, Perl, and Ruby consume more energy and take longer to execute. In the hands of an experienced programmer, Fortran can still outperform modern languages in certain cases.

But the answer isn’t straightforward: it depends on the scenario and the use case. Sometimes development speed is critical; other times, resource efficiency must take precedence.
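Part of this trade-off shows up even inside Python. The sketch below is an illustrative comparison, not a rigorous benchmark: it computes the same dot product twice, once as a plain interpreted loop and once by handing the looping to built-ins implemented in C (`sum`, `map`, and `operator.mul`). The function names are hypothetical, and timings vary by machine and Python version, so no numbers are claimed here.

```python
"""Same dot product, two ways: interpreted loop vs. C-implemented built-ins.

Illustrative only; run it yourself to see timings on your own machine.
"""
from __future__ import annotations

import operator
import random
import timeit


def dot_loop(a: list[float], b: list[float]) -> float:
    """Every multiply and add is dispatched by the Python interpreter."""
    total = 0.0
    for x, y in zip(a, b):
        total += x * y
    return total


def dot_builtin(a: list[float], b: list[float]) -> float:
    """sum(), map(), and operator.mul do the looping in C.

    On Python 3.12+, sum() uses compensated summation for floats, so the
    result may differ from dot_loop in the last few digits.
    """
    return sum(map(operator.mul, a, b))


if __name__ == "__main__":
    n = 200_000
    a = [random.random() for _ in range(n)]
    b = [random.random() for _ in range(n)]
    for fn in (dot_loop, dot_builtin):
        secs = timeit.timeit(lambda: fn(a, b), number=5)
        print(f"{fn.__name__}: {secs:.3f} s for 5 runs")
```

Libraries such as NumPy push the same idea further by running whole array operations in compiled code.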

Resource Thrift and Climate Change

There’s a scene in Interstellar where Murphy’s teacher tells Matthew McConaughey’s character that the world needs farmers, not engineers, because Earth is dying as a blight destroys the food supply. The movie’s message is that technology will save the day, but believing technology alone is the solution is dangerous.

We’re facing a climate crisis, and while technology has improved our quality of life, we need to ask ourselves: at what cost? The environmental impact of our models, data centers, and technology infrastructure is significant.

Is it time to refocus on wise resource management and making deliberate, thoughtful choices about how we code and the data we store? I believe so. We innovators, coders, and technologists have a responsibility to manage our resources efficiently and become more aware of the hidden environmental costs we leave behind.

Additional Reading

For language benchmarks, check out The Computer Language Benchmarks Game, or read this MIT Technology Review article on how much CO2 LLMs generate.