Weekend Briefing No. 21

Good Saturday morning! Welcome to this weekend's briefing. This week we learn that NumPy is proposing some big enhancements for version 2.0, find out what the 7 must-have features for crafting custom LLMs are, and ask whether a search engine augmented with LLMs is better than plain old search. Plus, a special infographic for Halloween!

Interesting data points

- As of October 16, 2023, the amount of CO2 in the air is 419.53 ppm, a rise of 0.8% from 2022

- The cost of a dozen large eggs (grade A) in the US is plateauing at $2.065

- Bank credit is plateauing at 17,233.8 billion dollars

NumPy proposed enhancements

If you're a data scientist or an analyst who codes in Python, chances are you've used NumPy. NumPy is a numerical computing package for the scientific community. It's open source, widely used, and supports a wide range of hardware and computing platforms.

Now the maintainers and developers of the package want to clean it up for its version 2.0 release, tentatively scheduled for December 2023!

Here are some proposed enhancements:

- NEP 53 - Evolving the NumPy C-API for NumPy 2.0

- NEP 52 - Python API cleanup for NumPy 2.0

- NEP 50 - Promotion rules for Python scalars

The proposed changes to the NumPy C-API and its cleanup are the ones I'm most interested in because of the efficiencies that can be gained. I'm looking forward to this next release!
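If you're curious what NEP 50 means in practice, here is a small sketch you can run on your own install. It only prints the result dtypes of a few mixed operations between NumPy values and plain Python scalars. The variable names are mine, and the comment about the scalar case reflects my reading of the NEP, so treat it as an assumption and check it against your NumPy version.

```python
import numpy as np

# An int8 array combined with a small Python int keeps the array's dtype.
int_array = np.array([1, 2, 3], dtype=np.int8)
int_result = int_array + 1

# A float32 array combined with a Python float also stays float32.
float_array = np.array([1.0, 2.0], dtype=np.float32)
float_result = float_array * 2.5

# A NumPy scalar combined with a Python float is the version-dependent case.
# Assumption (my reading of NEP 50): legacy promotion gives float64 here,
# while the NEP 50 rules keep float32.
scalar_result = np.float32(3) + 3.0

print("NumPy version:", np.__version__)
print("int8 array + Python int   ->", int_result.dtype)
print("float32 array * Python float ->", float_result.dtype)
print("float32 scalar + Python float ->", scalar_result.dtype)
```

Running this before and after upgrading is a quick way to spot code that quietly relied on the old promotion behavior.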

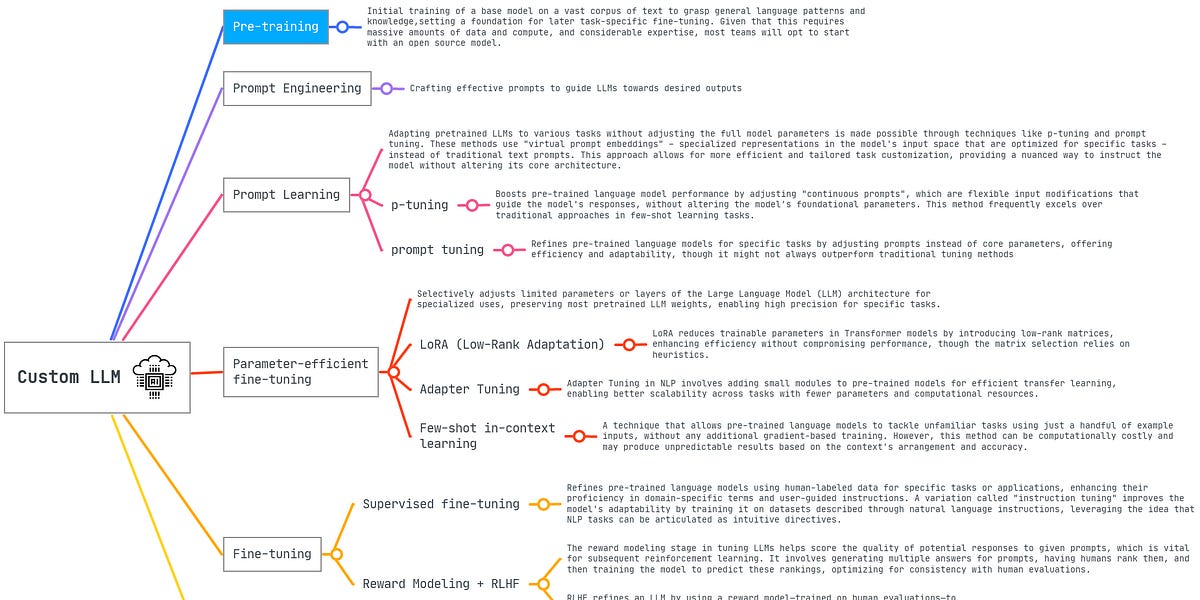

7 Must-have features for crafting custom LLMs

What a fantastic article highlighting the 7 must-haves for your custom LLM and RAG applications. This is a must-read for anyone trying to build an LLM-type application in a highly competitive market.

Customizing an LLM isn't just about technical finesse; it’s about aligning technology with real-world applications.

The key features are:

- Having a versatile and adaptive tuning toolkit

- Human-integrated customization

- Data augmentation and synthesis

- Facilitation of experimentation

- Distributed computing accelerator

- Unified lineage and collaboration suite

- Excellence in documentation and testing

FreshLLMs: Refreshing large language models with search engine augmentation

Researchers have shown that LLMs + Google Search generate better results than just a plain old Google Search. They created a new dataset of 600 questions used to evaluate a broad range of reasoning abilities for the LLM and Google. In the end they created FRESHPROMPT and FRESHQA.

FRESHPROMPT significantly improves performance over competing search engine-augmented approaches on FRESHQA, and an ablation reveals that factors such as the number of incorporated evidences and their order impact the correctness of LLM-generated answers.
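To make the point about evidence count and order concrete, here is a minimal sketch of how retrieved search snippets might be folded into a prompt. This is not the authors' FRESHPROMPT format. The `Evidence` class, the `build_prompt` function, and the `max_evidences` and `newest_last` parameters are all hypothetical names I made up for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Evidence:
    """One retrieved search result (hypothetical structure)."""
    source: str
    published: date
    snippet: str


def build_prompt(question, evidences, max_evidences=3, newest_last=True):
    """Assemble a prompt from the most recent evidences, in a chosen order."""
    # Keep only the most recent `max_evidences` items.
    ranked = sorted(evidences, key=lambda e: e.published, reverse=True)
    ranked = ranked[:max_evidences]
    # Optionally place the newest evidence closest to the question.
    if newest_last:
        ranked = list(reversed(ranked))
    blocks = [
        f"source: {e.source}\ndate: {e.published.isoformat()}\nsnippet: {e.snippet}"
        for e in ranked
    ]
    return "\n\n".join(blocks + [f"question: {question}", "answer:"])


evidences = [
    Evidence("site-a.example", date(2021, 5, 1), "Older snippet."),
    Evidence("site-b.example", date(2023, 9, 30), "Newest snippet."),
    Evidence("site-c.example", date(2022, 7, 15), "Middle snippet."),
]
prompt = build_prompt("What changed most recently?", evidences, max_evidences=2)
print(prompt)
```

Changing `max_evidences` or flipping `newest_last` gives you the two knobs the ablation talks about: how many evidences go in and in what order.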

If you ask me, this makes sense. Integrate LLMs with a search engine application and you should see better results. I would call this integration "Beyond Search" and it bears keeping a close eye on.

Franchises with the most horror films

Just in time for Halloween, @idlecrowdesigns gives us the franchises with the most horror films. The Ring scared the hell out of me when I first saw it!

Help me reach my BHAG!

Hi friends, I have a Big Hairy Audacious Goal (BHAG) for the end of the year: I want to reach 1,000 newsletter subscribers! I'm asking for your help to get this done, so if you liked this newsletter (or any of the past articles), please share it using one of the sharing icons below. Thank you!
